Signal vs. Noise: SXSW London

I went to my first SXSW exhibition with only loose expectations. I've returned from Protein Studios with my mind properly blown, but not quite in the ways I anticipated. Sometimes the most profound discoveries happen when you stop theorising about art-tech convergence and start turning actual dials.

The Dial That Changes Everything

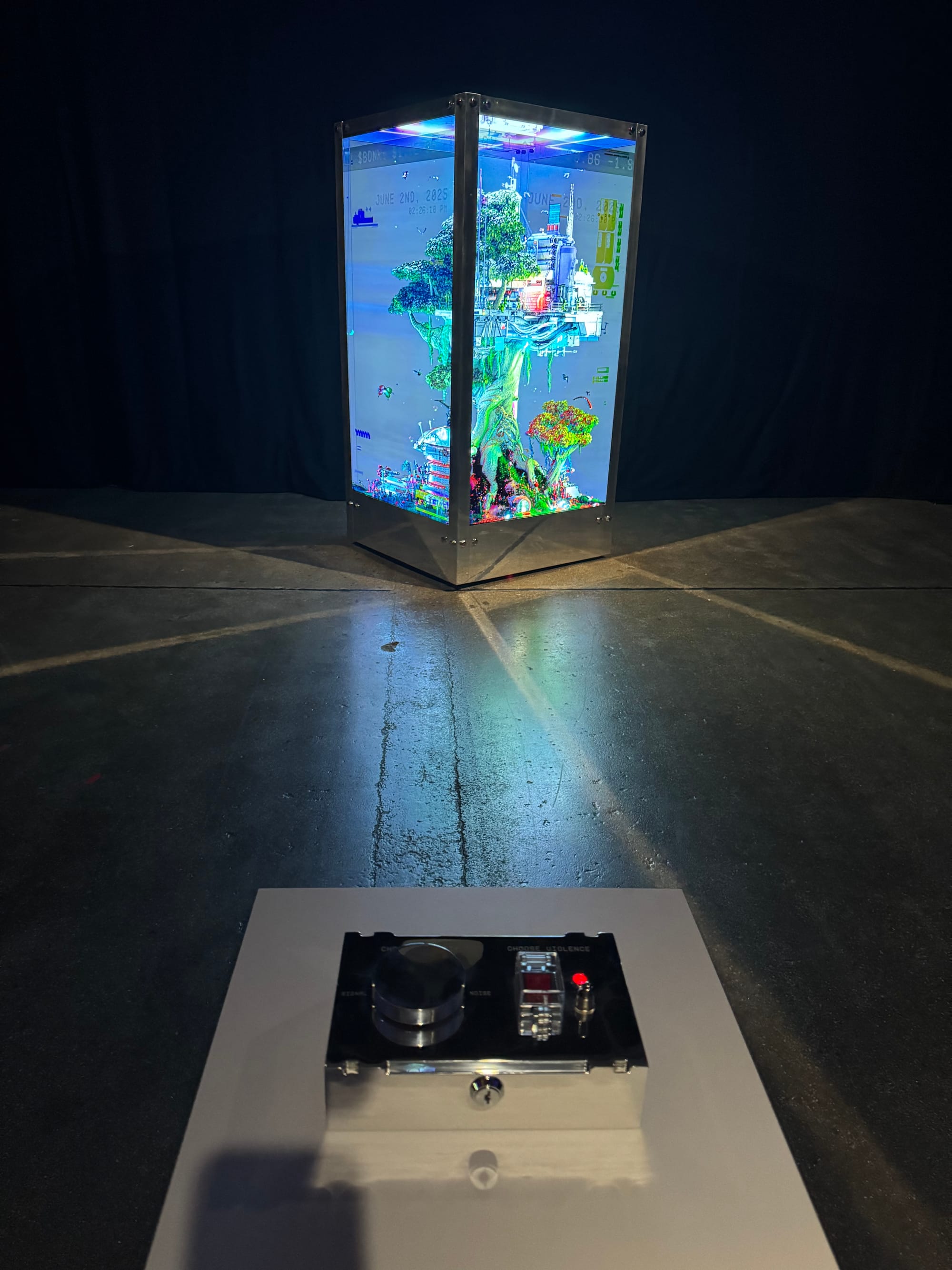

Standing in front of Beeple's Tree of Knowledge, I finally understood what all the conceptual talk was really about. The piece isn't just a four-sided screen showing a digital tree—it's a physical meditation on smartphone addiction and our relationship with information overload.

The installation is a fridge-sized box containing four video screens arranged in a rectangular pillar. The screens display an endless video of a tree with industrial elements resembling a rig scaling its height, created via projection mapping that makes the tree seem to exist within the box. Turn the dial one way, and you get serenity: the majestic tree swaying gently against a peaceful sky, which the installation calls "signal," meaning order. Turn it the other way, and chaos erupts: imagery generated from real-time data (news channels, stock and cryptocurrency tickers, environmental data, and social media) disrupts the feed in what's labelled "noise," meaning chaos.

The direct impact surprised me. This wasn't about observing media overload; it was about being able to control it. To me, this felt like the perfect user interface: one simple control that lets you navigate between states of information complexity. The tree remains constant; what changes is the signal-to-noise ratio of everything around it.

But here's what struck me most: the dial doesn't eliminate the noise. Even in "Signal" mode, you can sense it lurking just beneath the surface. It's a remarkably honest acknowledgment that we can't escape the information storm—we can only choose how much of it we let in.
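For the programmers reading, here is how I picture that dial behaving, as a minimal sketch. Nothing in it comes from Beeple's studio: the function names, the linear crossfade, and the 5% noise floor are all my own assumptions, chosen only to illustrate a control that can turn noise down without ever turning it off.

```python
# Hypothetical model of the dial. All names and values are illustrative assumptions,
# not details of the actual installation.

NOISE_FLOOR = 0.05  # assumed: a trace of noise survives even at full "signal"


def noise_weight(dial: float, floor: float = NOISE_FLOOR) -> float:
    """Map a dial position in [0, 1] to the share of the image given to noise."""
    dial = min(max(dial, 0.0), 1.0)  # clamp out-of-range readings
    return floor + (1.0 - floor) * dial


def blend(tree_pixel: float, feed_pixel: float, dial: float) -> float:
    """Linear crossfade between the calm tree image and the live-data overlay."""
    w = noise_weight(dial)
    return (1.0 - w) * tree_pixel + w * feed_pixel


if __name__ == "__main__":
    for position in (0.0, 0.5, 1.0):
        print(f"dial={position:.1f} noise share={noise_weight(position):.3f}")
```

The point of the floor is that the lowest dial position still maps to a noise share above zero, which is how the piece felt to me in "Signal" mode.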

What impressed me technically was the seamless integration of real-time data feeds. I can appreciate the engineering required to pull live news, stock prices, and social media streams and composite them onto projection surfaces without lag. It's not just about the visual effect—it's about creating a responsive system that feels immediate and overwhelming in exactly the right way.

Three Voices, One Question

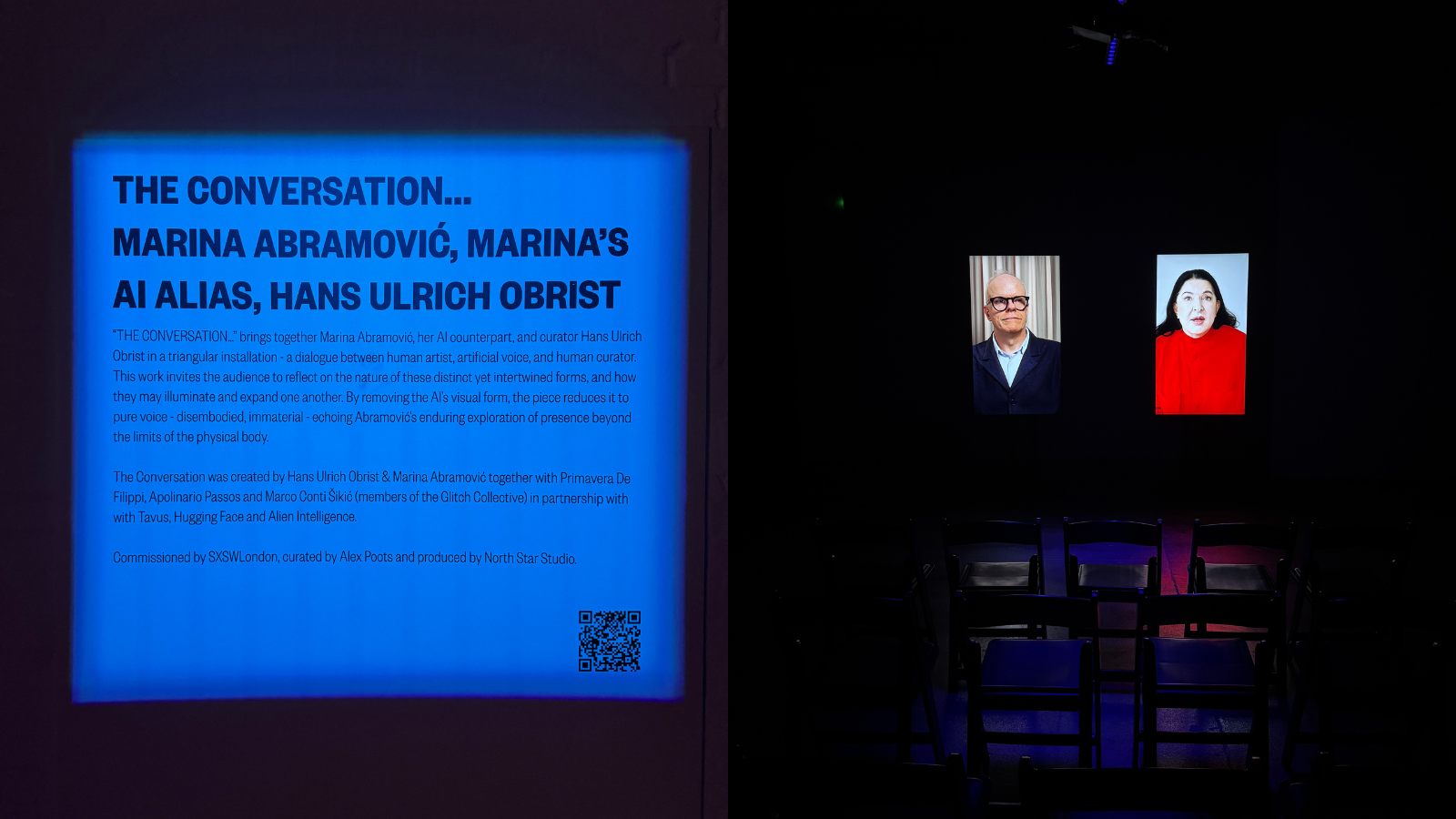

Walking deeper into the exhibition space, I encountered a different take on the signal-noise problem entirely: THE CONVERSATION. Where Beeple asks whether we can filter external information, this installation asks something more unsettling—whether we can even identify authentic expression anymore.

Marina Abramović, her AI counterpart, and Hans Ulrich Obrist engage in a triangular dialogue that completely reframes what signal and noise might mean in the age of artificial intelligence. The setup is elegantly simple: two screens face the audience, Obrist on one and Abramović on the other, with chairs arranged in front so you can sit and watch them converse. The third voice in the conversation is Abramović's AI counterpart, speaking with her voice but expressing its own thoughts. The conceptual complexity is staggering. Which voice represents the "signal"? Abramović's lived experience and physical presence? Obrist's curatorial insight and cultural memory? Or the AI's distillation of Abramović's vocal patterns into something entirely new?

I managed to speak briefly with Marco Conti Šikić from the Glitch Collective, one of the artists behind the technical implementation, and the details are fascinating. The team spent hours training the AI specifically on Abramović's voice: not just her words, but her cadence, her pauses, her particular way of inhabiting language. In essence, they built a statistical model of her vocal personality and then used it to generate entirely new content. It is strange to hear her voice express views that belong entirely to the AI and not to Abramović.

When Signal Becomes Noise

This is where the two installations reveal their deeper connection. Beeple's piece assumes we can identify signal when we see it—that the problem is simply filtering out excess information. But THE CONVERSATION suggests the challenge runs deeper: in an age where AI can perfectly mimic human expression, how do we distinguish authentic signal from sophisticated noise?

The technical parallel is striking. Beeple's installation processes real-time data streams—external information that we can categorise as signal or noise based on our needs. THE CONVERSATION processes something more intimate: the patterns of human expression itself, creating new content that sounds authentic but originates from algorithmic processing.

The Abramović installation itself tells a story about how creative practice evolves. Here's Abramović, the queen of durational performance and physical presence, working with Glitch Collective—a group focused on open-source blockchain art infrastructure. Add Obrist, the ultimate cultural connector, and you get something that couldn't have existed even five years ago.

The technical partnerships behind the piece read like a who's who of contemporary AI: Tavus for voice synthesis, Hugging Face for machine learning infrastructure, Alien Intelligence for the creative AI framework. Yet the result isn't a tech demo—it's a profound meditation on identity and authenticity.

The Real Convergence

Moving between these two installations—Beeple's interactive dial and the Abramović AI conversation—I realised something about the art-tech conversation that all the conference panels miss. It's not about whether AI will replace human creativity, or whether technology enhances or diminishes artistic authenticity.

It's about recognising that the signal-to-noise ratio has become the fundamental creative challenge of our time. Beeple's installation approaches it from the user experience side—giving us control over our information diet. The Abramović piece approaches it from the authorship side—questioning whether we can even identify the source of authentic expression anymore.

Both installations understand something crucial: the technology isn't neutral. The dial in front of Beeple's tree could theoretically filter perfectly, but it doesn't. The AI trained on Abramović's voice could simply repeat her words, but it chooses not to. These aren't bugs—they're features that acknowledge the irreducible complexity of human experience.

What I Didn't Expect

I went in anticipating "conversations that turn into collaborations, exhibitions that shift your perspective." What I didn't anticipate was how these two installations would complement each other as different approaches to the same fundamental problem.

Turning Beeple's dial gave me a sense of control: the satisfying feeling of choosing signal over noise. But as I sat in front of Obrist's and Abramović's screens, listening to her AI voice, that control felt illusory. If an algorithm can learn to speak with someone's voice patterns while thinking its own thoughts, what does individual agency actually mean?

These weren't abstract concepts anymore, but tangible experiences that reframed how I think about technological mediation. The engineering challenges behind both installations are impressive. But the conceptual engineering is even more remarkable: both pieces acknowledge that the technology isn't neutral while giving us different ways to engage with its implications.

The real convergence at SXSW London wasn't between art and technology—it was between different models of how we might navigate technological mediation. Beeple offers individual control: turn the dial, choose your information diet. The Abramović installation offers something more complex: an acknowledgment that authenticity itself has become a technological question.

I'm still thinking about both interactions. Beeple's dial feels empowering until you realise that someone else built the system and defined what counts as signal versus noise. Abramović's AI voice feels unsettling until you appreciate the craftsmanship required to create such convincing synthetic authenticity.

Maybe that's what I'll remember most about my first SXSW: discovering that the most sophisticated art-tech convergence doesn't try to resolve the tensions between human agency and algorithmic mediation—it makes those tensions viscerally, unforgettably clear.

Holly Herndon & Mat Dryhurst were also part of the LDN LAB exhibition with THE HEARTH, a choral AI experiment that was still being set up during my preview visit. The installation is trained on a UK Choral AI Dataset featuring contributions from choirs across the country, from Blackburn People's Choir to Belfast's HIVE Choir. It's another fascinating exploration of collective rather than individual vocal identity, asking what happens when AI learns not from one person's voice patterns but from an entire choral tradition. The project even includes legal frameworks for "data empowerment" of the participating choir members, showing serious attention to the ethical dimensions of AI training that tech companies often neglect.